Block AI bots, scrapers and crawlers – a simple overview


When it comes to blocking AI bots, scrapers and crawlers, you have a few different options, depending on your web platform and how aggressive you wish to be in your approach.

Below I list 3 possible solutions for blocking AI scrapers and crawlers: 1) block bots using robots.txt, 2) block bots using your web server, and 3) block bots using Cloudflare.

Each option comes with its own set of pros and cons, which I also outline below. As always, if you have any questions, just reach out and let me know. I’d be glad to help.

Block AI bots and scrapers with robots.txt

To block bad bots using robots.txt, add each bot’s user agent to the file, followed by a Disallow: / rule, as shown below. The robots.txt file must be placed in the root folder of your website, so that it is served at yourdomain.com/robots.txt.

An up-to-date robots.txt for blocking AI bots can be found here.

```
User-agent: FakeBot
Disallow: /

User-agent: ScumBot
Disallow: /

User-agent: EvilBot
Disallow: /
```
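If you want to double-check a robots.txt like the one above before uploading it, Python’s standard library ships a robots.txt parser. The sketch below is an illustration, not an official validator: it builds one Disallow: / group per user agent (the names are the placeholder bots from the example) and asks `urllib.robotparser` whether each bot may fetch the homepage. `example.com` is a stand-in for your own domain.

```python
from urllib.robotparser import RobotFileParser

# Placeholder bot names from the example above; swap in real user agents.
BLOCKED_BOTS = ["FakeBot", "ScumBot", "EvilBot"]


def build_robots_txt(user_agents):
    """Return robots.txt text with one 'User-agent' + 'Disallow: /' group per bot."""
    groups = [f"User-agent: {ua}\nDisallow: /" for ua in user_agents]
    # Groups are separated by a blank line; there is no blank line inside a group.
    return "\n\n".join(groups) + "\n"


def is_allowed(robots_txt, user_agent, url="https://example.com/"):
    """Ask Python's standard robots.txt parser whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    robots = build_robots_txt(BLOCKED_BOTS)
    print(robots)
    for ua in BLOCKED_BOTS + ["Googlebot"]:
        print(f"{ua}: {'allowed' if is_allowed(robots, ua) else 'blocked'}")
```

With this parser at least, a blank line between a User-agent line and its Disallow line ends the group early and the Disallow: / is silently ignored, so keep each pair on consecutive lines.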

Pros of using robots.txt to block AI scrapers

- Technically easy. Anyone can make a robots.txt file; no technical knowledge is required.

- Accessible on all web hosts. It doesn’t matter what web platform you are on, whether it’s a VPS, a CMS, shared hosting or something else.

- Privacy friendly. Your traffic isn’t going through any 3rd parties.

Cons of using robots.txt to block AI scrapers

- Robots.txt is a voluntary protocol. This means that companies are under no legal obligation to comply with your robots.txt rules, and some scrapers ignore them.

- The robots.txt must be continually updated. AI companies often change the user-agent names of their bots, retire some and introduce new ones.
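Because the list goes stale, it can help to audit your file now and then. A minimal sketch, assuming you paste in your current robots.txt as text: `unblocked_bots` reports which bots from a watch list could still fetch your homepage. The watch list is a handful of names from the scraper list further down this article, and `example.com` is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# A few AI crawler user agents to watch for (extend as new bots appear).
WATCH_LIST = ["GPTBot", "ClaudeBot", "CCBot", "Bytespider", "Google-Extended"]


def unblocked_bots(robots_txt, bots=WATCH_LIST, url="https://example.com/"):
    """Return the bots that the given robots.txt still allows to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in bots if parser.can_fetch(bot, url)]


if __name__ == "__main__":
    current = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: CCBot\nDisallow: /\n"
    missing = unblocked_bots(current)
    print("Not yet blocked:", ", ".join(missing) or "none")
```

To audit the live file instead of a pasted copy, the same parser can fetch it: create `RobotFileParser("https://example.com/robots.txt")`, call `.read()`, then use `.can_fetch()` as above.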

What to do if the bots don’t comply with robots.txt

If the bad bots do not respect your robots.txt rules, you have a few options. You can move to server-side blocking or use a proxy solution such as Cloudflare (see below).

Or, if you are using WordPress, you can add the Blackhole for Bad Bots plugin to trap bad bots that don’t honor robots.txt.
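The general trap idea can be sketched in a few lines. This is a generic illustration of the honeypot technique, not the plugin’s actual code: robots.txt disallows a hypothetical /bot-trap/ path (which a site would link to invisibly), so any client that requests it has ignored robots.txt and is banned by IP from then on.

```python
TRAP_PATH = "/bot-trap/"  # hypothetical path, disallowed for all bots in robots.txt

# The robots.txt rule that well-behaved bots will obey.
ROBOTS_TXT = f"User-agent: *\nDisallow: {TRAP_PATH}\n"


class BotTrap:
    """Ban any client IP that requests the trap path; compliant bots never will."""

    def __init__(self):
        self.banned_ips = set()

    def handle(self, client_ip, path):
        """Return the HTTP status to send for a request from client_ip to path."""
        if client_ip in self.banned_ips:
            return 403
        if path.startswith(TRAP_PATH):
            self.banned_ips.add(client_ip)  # it ignored robots.txt, so ban it
            return 403
        return 200


if __name__ == "__main__":
    trap = BotTrap()
    print(trap.handle("203.0.113.5", "/"))           # normal visit
    print(trap.handle("203.0.113.5", "/bot-trap/"))  # took the bait
    print(trap.handle("203.0.113.5", "/"))           # now banned
```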

Block AI bots using your web server

Server-side blocking of bots, scrapers and crawlers is far more reliable and efficient than relying on a robots.txt file. The three most widely used web servers on the Internet today are Apache, NGINX and LiteSpeed.

Of these, Apache is still the most common on hosting platforms, even though it is considerably slower (NGINX and LiteSpeed are far superior in terms of speed).

Block AI bots on an Apache or LiteSpeed web server

Apache, hybrid Apache + NGINX and LiteSpeed web servers all use the .htaccess file in the website root folder to configure options such as server access.

To block bad bots on your Apache or LiteSpeed server, add a list of user agents to your .htaccess file, like this (the rule only takes effect if mod_rewrite is enabled):

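```
# Block via User Agent
<IfModule mod_rewrite.c>
# Turn on the rewrite engine for this directory
RewriteEngine On
# Match any User-Agent containing one of these names; [NC] = case-insensitive
RewriteCond %{HTTP_USER_AGENT} (EvilBot|ScumBot|FakeBot) [NC]
# Answer matching requests with 403 Forbidden ([F]) and stop processing rules ([L])
RewriteRule (.*) - [F,L]
</IfModule>
```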
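To see which requests that RewriteCond would catch, here is a rough Python equivalent, assuming Python’s `re` behaves like Apache’s PCRE for this pattern (which should hold for a plain alternation like this one). [NC] corresponds to `re.IGNORECASE`, and the pattern is unanchored, so the bot name can appear anywhere in the User-Agent header. The sample user-agent strings are made up.

```python
import re

# Same pattern as the RewriteCond line; [NC] ("no case") maps to re.IGNORECASE.
BAD_BOTS = re.compile(r"(EvilBot|ScumBot|FakeBot)", re.IGNORECASE)


def would_block(user_agent):
    """Return True if the .htaccess rule would answer this User-Agent with 403."""
    # The pattern is unanchored, so a match anywhere in the header counts.
    return BAD_BOTS.search(user_agent) is not None


if __name__ == "__main__":
    samples = [
        "Mozilla/5.0 (compatible; EvilBot/2.1; +https://example.com/bot)",
        "scumbot/1.0",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/128.0",
    ]
    for ua in samples:
        print("403" if would_block(ua) else "pass", "-", ua)
```

Because this is a substring match, a short name in the list can also catch unrelated user agents that happen to contain it, so prefer full, distinctive bot names.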

Block AI bots on an NGINX web server

NGINX does not use .htaccess files; its configuration lives in nginx.conf and per-site virtual host (vhost) files instead. To block bad bots on your NGINX server, add a rule like this inside the server block for your site, then reload NGINX:

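```
server {
    # ... your existing listen, server_name and root directives ...
    # Return 403 Forbidden when the User-Agent matches (~* = case-insensitive regex)
    if ($http_user_agent ~* (EvilBot|ScumBot|FakeBot)) {
        return 403;
    }
}
```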
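Whichever server you use, it is worth confirming that the rule actually fires. A minimal sketch using only Python’s standard library: it requests a URL with a chosen User-Agent header and returns the HTTP status, so a blocked bot name should come back as 403 and a normal browser string as 200. The user-agent strings are placeholders, and the script name check_block.py is just an example.

```python
import sys
import urllib.error
import urllib.request


def status_for(url, user_agent, timeout=10):
    """Request url with the given User-Agent and return the HTTP status code."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses (including the 403 from the block rule) raise HTTPError.
        return err.code


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_block.py https://your-site.example/
    for ua in ["EvilBot/1.0", "Mozilla/5.0 (X11; Linux x86_64) Firefox/128.0"]:
        print(ua, "->", status_for(sys.argv[1], ua))
```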

Pros of using server-side blocking of AI scrapers

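- Rules are enforced, not requested. Unlike robots.txt, the rules you configure on your web server are applied to every request, so a bot cannot simply choose to ignore them. Keep in mind that user-agent rules only catch bots that identify themselves honestly; a scraper that fakes a browser user agent will get through.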

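- Faster and less resource-intensive. A blocked bot gets a short 403 response instead of your full pages, which saves bandwidth and CPU compared with a bot that ignores robots.txt.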

- Privacy friendly. Your traffic isn’t going through any 3rd parties.

Cons of using server-side blocking of AI scrapers

- Requires access to your web server’s configuration on your hosting backend. If you are running your own VPS, this isn’t an issue, and some shared hosting environments let you configure access rules in .htaccess.

- Requires more technical knowledge to set up than robots.txt. For server-side blocking you need to use the correct syntax, or the rules won’t work; a typo can even take your website down (a broken .htaccess file typically results in a 500 Internal Server Error).
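One way to reduce the risk of a syntax slip, and to keep Apache and NGINX in sync, is to generate both snippets from a single list. The sketch below is a hypothetical helper, not a standard tool: it escapes each name with `re.escape` (so characters such as . or - are matched literally), rejects names containing whitespace, and emits the same directives as the examples above. You would still review the output, test NGINX changes with `nginx -t` before reloading, and load your site right after editing .htaccess.

```python
import re

# Hypothetical single source of truth for the bots you block.
BOTS = ["GPTBot", "CCBot", "ClaudeBot", "Claude-Web", "Bytespider"]


def ua_pattern(bots):
    """Build one alternation like (GPTBot|CCBot|...) for both servers."""
    for bot in bots:
        if not bot or any(ch.isspace() for ch in bot):
            raise ValueError(f"unsupported bot name: {bot!r}")
    # re.escape keeps names with '.', '-' etc. literal in the regex.
    return "(" + "|".join(re.escape(bot) for bot in bots) + ")"


def htaccess_snippet(bots):
    """Return the mod_rewrite block from the Apache/LiteSpeed section."""
    return "\n".join([
        "# Block via User Agent",
        "<IfModule mod_rewrite.c>",
        "RewriteEngine On",
        f"RewriteCond %{{HTTP_USER_AGENT}} {ua_pattern(bots)} [NC]",
        "RewriteRule (.*) - [F,L]",
        "</IfModule>",
    ])


def nginx_snippet(bots):
    """Return the 'if' block that goes inside an NGINX server { } block."""
    return "\n".join([
        f"if ($http_user_agent ~* {ua_pattern(bots)}) {{",
        "    return 403;",
        "}",
    ])


if __name__ == "__main__":
    print(htaccess_snippet(BOTS))
    print()
    print(nginx_snippet(BOTS))
```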

Block AI bots and scrapers with Cloudflare

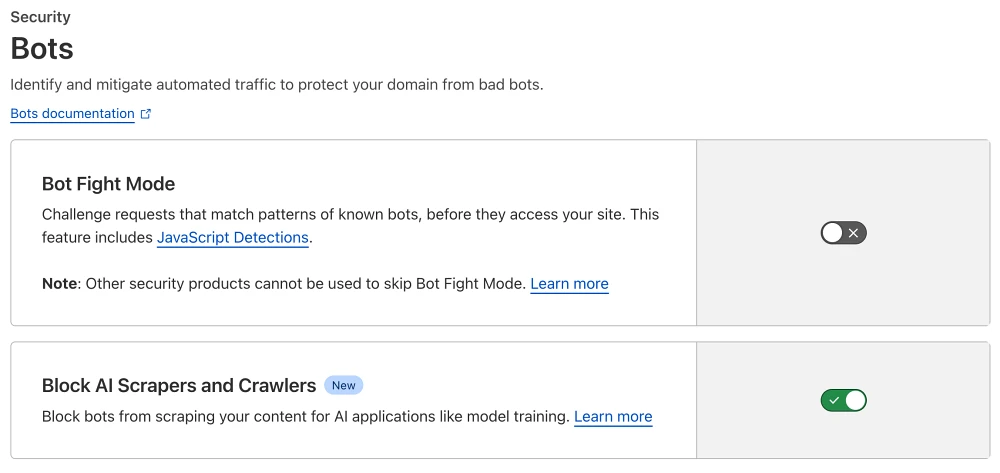

To block AI scrapers and crawlers on Cloudflare, follow the steps below:

- Add your website to Cloudflare (if it isn’t already).

- Go to ‘Security’ → ‘Bots’ and activate ‘Block AI Scrapers and Crawlers’, as seen below:

Alternatively, you can configure your own list of bots to block on Cloudflare with a custom rule. If you want to be even more aggressive, you can also block the networks (autonomous systems, or ASNs) that the bad bots are hosted on.
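If you go the custom-list route, Cloudflare custom rules are written as filter expressions in Cloudflare’s Rules language, where the `http.user_agent` field and the `contains` operator can match bot names. A small sketch, assuming you paste the output into a custom rule whose action is set to Block; the bot names are examples from the list later in this article. `contains` compares case-sensitively, so use each bot’s exact capitalization.

```python
# Example bot names; use each bot's exact capitalization.
BOTS = ["GPTBot", "CCBot", "Bytespider"]


def cloudflare_expression(bots):
    """Build a Rules-language expression that matches any of the given user agents."""
    def quoted(value):
        # Escape backslashes and double quotes inside the string literal.
        return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'

    return " or ".join(f"(http.user_agent contains {quoted(bot)})" for bot in bots)


if __name__ == "__main__":
    print(cloudflare_expression(BOTS))
```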

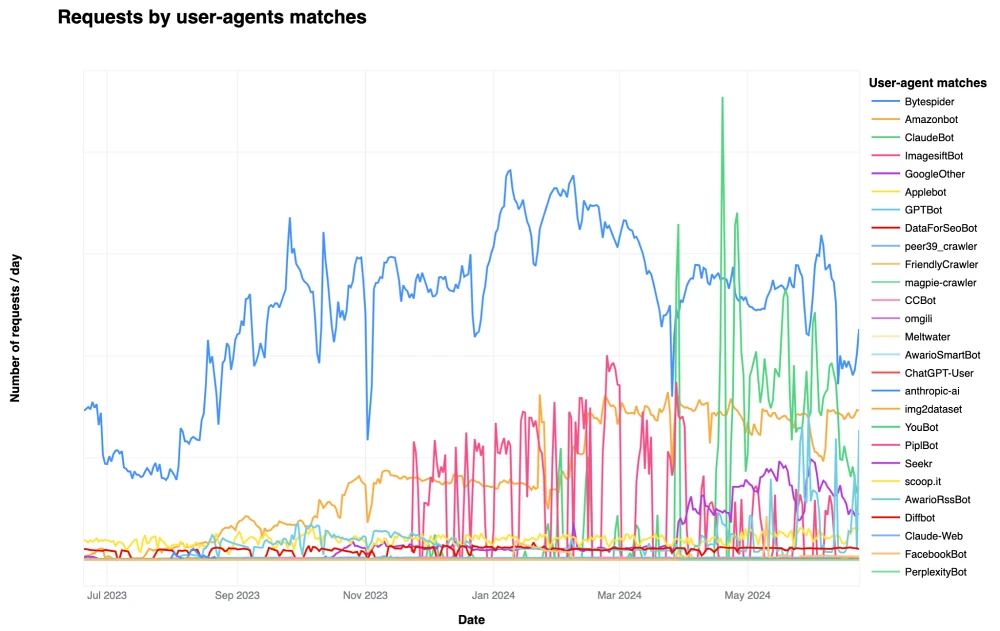

Cloudflare does not publish a complete list of the bots this setting covers, so it is unclear exactly which ones it blocks. However, I would expect it to block the bots shown in this illustration from their blog:

Pros of using Cloudflare to block AI scrapers

- Easier to set up than server-side blocking. Cloudflare offers a one-click setting to block AI crawlers.

- Bots never reach your server, as they are stopped on Cloudflare’s network. This means that you are not wasting any bandwidth or CPU resources dealing with the requests from bots.

Cons of using Cloudflare to block AI scrapers

- Requires that you use Cloudflare as your DNS provider. This means you need access to your domain’s nameserver settings and must be able to point them to Cloudflare’s nameservers.

- Many hosting platforms, such as Shopify, One.com and Wix, do not allow you to use Cloudflare.

- All your data passes through a 3rd party (Cloudflare). Many of the world’s large companies trust Cloudflare with their data, but if you don’t, this option is not for you.

- Proxy server issues. Because Cloudflare acts as a reverse proxy, it masks the IP address of your web server, and your server sees Cloudflare’s IP addresses instead of your visitors’ real ones. This can cause technical issues if you have a more complicated setup.

Caveat: blocking some AI bots may hurt your visibility on search engines

Blocking certain AI bots, such as PerplexityBot, YouBot or OAI-SearchBot, may lower your website’s visibility on those companies’ search engines.

Blocking Amazonbot or Applebot may lower your chances of being included in voice search results from Alexa or Siri. Microsoft Copilot uses Bingbot as its user agent, so you can’t block Copilot without also being excluded from Bing search.

If you are concerned about this, block only known AI scrapers. This can be tricky, as it isn’t always clear how the different companies use their bots.

Below is a list of what I currently believe are purely scraper bots that won’t hurt your discoverability if you block them:

- anthropic-ai

- Applebot-Extended

- Bytespider

- CCBot

- ClaudeBot

- Claude-Web

- cohere-ai

- Diffbot

- FacebookBot

- FriendlyCrawler

- Google-Extended

- GPTBot

- ImagesiftBot

- img2dataset

- Meta-ExternalAgent

- omgili

- omgilibot

- Scrapy

- Timpibot

- VelenPublicWebCrawler
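To apply this caveat mechanically, you could check a planned blocklist against both groups of names before deploying it. A small sketch: the two sets simply restate the bots named in this section, so they are only as current as this list, and "SomeNewBot" in the example is a hypothetical name standing in for a bot that appears in neither group.

```python
# Bots that, per the caveat above, may affect search or voice-assistant visibility.
DISCOVERY_BOTS = {"perplexitybot", "youbot", "oai-searchbot", "amazonbot", "applebot", "bingbot"}

# Bots listed above as (believed to be) pure scrapers.
SCRAPER_BOTS = {
    "anthropic-ai", "applebot-extended", "bytespider", "ccbot", "claudebot",
    "claude-web", "cohere-ai", "diffbot", "facebookbot", "friendlycrawler",
    "google-extended", "gptbot", "imagesiftbot", "img2dataset",
    "meta-externalagent", "omgili", "omgilibot", "scrapy", "timpibot",
    "velenpublicwebcrawler",
}


def review_blocklist(blocklist):
    """Split a blocklist into (scrapers, discovery_risks, unknown), ignoring case."""
    scrapers, risks, unknown = [], [], []
    for bot in blocklist:
        name = bot.lower()
        if name in SCRAPER_BOTS:
            scrapers.append(bot)
        elif name in DISCOVERY_BOTS:
            risks.append(bot)
        else:
            unknown.append(bot)
    return scrapers, risks, unknown


if __name__ == "__main__":
    ok, risky, unsure = review_blocklist(["GPTBot", "Applebot", "Applebot-Extended", "SomeNewBot"])
    print("Scrapers:", ok)
    print("May hurt discoverability:", risky)
    print("Not in either list:", unsure)
```

Note how exact names matter: Applebot-Extended lands in the scraper group while plain Applebot is flagged as a visibility risk.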

If you would like to outsource blocking bots

Many website owners may not want to waste their time messing with this. If you wish to outsource the blocking of bad bots and protection of your website, I’d be happy to help. Just reach out and we’ll find a solution that suits your needs.